What Your Nonprofit Should (And Shouldn't) Use AI For

If you’re a nonprofit professional, you likely already use artificial intelligence (AI) in your operations and are considering expanding its use. In fact, studies show that 82% of nonprofits now use AI in some capacity.

While the potential is significant, AI isn’t a perfect fit for every scenario. The key is knowing where AI strengthens your nonprofit’s work and where it may create risks or diminish authentic connection. That’s why a structured approach to AI use is so important. Yet fewer than 25% of nonprofits have a formal AI use plan in place.

This guide will break down where AI tools can be a powerful ally for fundraising, grant writing, and digital transformation, and where relying on them can undermine trust, introduce bias, or drain resources.

What AI should be used for

Automating repetitive tasks

The best use of AI is to handle routine tasks so your team has more bandwidth for human-centric responsibilities. For instance, your team can use AI to:

- Save time on scheduling and coordination. AI tools can handle repetitive tasks like sorting donor lists and sending meeting reminders, freeing staff from administrative bottlenecks.

- Reduce manual data entry errors. By automating data capture and updates within CRMs, AI minimizes mistakes that can happen when staff enter information manually, ensuring donor records are accurate and reliable.

- Free up staff for strategic work. When routine tasks (e.g., volunteer management and report generation) are streamlined, nonprofit professionals can focus on higher-value activities such as building relationships with donors, crafting campaigns, or shaping long-term strategy.

To maximize these gains, identify which routine tasks consume the most staff time and prioritize those for AI support. Pair automation with clear oversight responsibilities so efficiency doesn’t compromise accuracy or accountability.

Strengthening fundraising outreach

Many nonprofit fundraising tools already use AI, even if you don’t realize it. In fact, AI has powered nonprofit tech for years, enabling predictive donor scoring, automated email optimization, and grant management systems that flag compliance risks.

Now that AI is more advanced, it’s even more useful for supporting essential fundraising efforts. For example, AI can:

- Identify donor giving patterns with predictive analytics

- Optimize timing of campaigns and appeals

- Support donor segmentation to personalize outreach

All of these abilities help provide insights that staff can adapt into authentic, mission-aligned content and messaging. However, you shouldn’t rely on AI to create all of your messaging. We’ll explore that distinction later.

Improving grant writing and reporting

With at least 30% of nonprofits relying on grants to fund their missions, the quality of each application can make or break critical programs. Refining grant applications is essential for highlighting compelling insights that make a solid case for support. AI can lighten the burden of drafting grant materials, allowing staff to focus on strategy, strengthen relationships with funders, and add the polish that helps proposals stand out and secure funding.

Here are some use cases for AI during the grant application process:

- Generate first drafts to reduce staff workload

- Suggest how to improve clarity and formatting

- Provide structure for reports while staff refine messaging

- Compile and analyze data for reporting

As you integrate AI into your grant process, create a workflow that pairs staff subject-matter expertise with AI drafting, so outputs are both efficient and funder-ready. Build in human checkpoints where team members review language for accuracy, alignment with funder priorities, and the personal touch that wins support.

What AI shouldn’t be used for

The uses below carry real risks. While these risks can significantly impact your nonprofit, the right strategies can mitigate them.

Completely managing donor relationships

The foundation of the nonprofit world is human connection. When you use AI to communicate with donors, it can suggest appropriate wording based on its training data, but it cannot understand the depth of your relationships or translate that into truly meaningful messaging. AI can also miss critical context that informs donor relationships, such as body language or the full contents of a conversation.

Here are some other risks of overreliance on AI for donor communications:

- Undermining your brand voice. Your nonprofit has built connections with supporters based on the specific ways you address problems and communicate impact. Suddenly switching to an AI voice can catch supporters off guard and jeopardize the trust you’ve built with them.

- Alienating supporters. Many people are wary of AI: 82% are at least somewhat skeptical of it. Regardless of your nonprofit’s intentions, using AI may cause some donors to lose trust in your team and discourage them from supporting your work in the future.

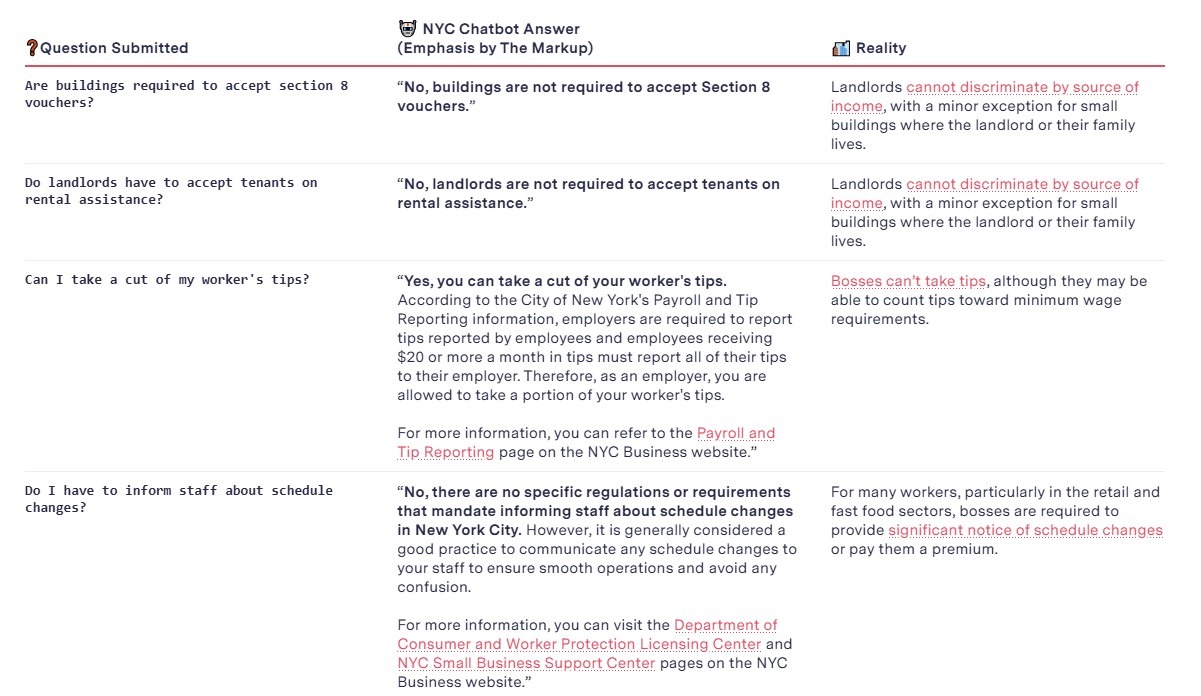

- Biased or incorrect information. While it’s improving every day, AI is far from perfect. At times, it can produce biased outputs or generate inaccurate details (often referred to as “hallucinations”). Left unchecked, these errors can slip into donor communications, risking your nonprofit’s credibility and damaging the trust you’ve built with supporters. Look at this real example of an AI hallucination:

Alt text: An example of AI hallucinating and providing incorrect information.

You might notice that these risks all come back to one thing: donor trust. That’s why clear communication is essential when integrating AI into your operations. Heller Consulting recommends creating “a resource on your website explaining your values regarding AI, detailing how you use it, and (most importantly) how you understand that it isn’t a replacement for all nonprofit operations.”

Managing donor data without safeguards

Donor information is one of your nonprofit’s most valuable assets, and one of the most sensitive. Feeding this data directly into third-party AI tools without oversight creates significant risks around privacy, security, and compliance. While AI can help analyze patterns in donor behavior, nonprofits must take extra care to protect supporter information.

Here are some of the risks of handling donor data with AI tools:

- Violating privacy regulations. Laws like GDPR and CCPA place strict requirements on how personal data can be collected, stored, and shared. Uploading donor records into an AI tool that doesn’t meet these requirements could result in penalties and damage your nonprofit’s reputation.

- Exposing sensitive information. Many generative AI tools store prompts or inputs. This means that once you upload donor names, giving histories, or contact details, that information could be retained or even used to train the system. This loss of control increases the risk that people outside your nonprofit could access sensitive data.

- Increasing vulnerability to cyberattacks. Concentrating sensitive donor data in third-party systems creates new entry points for hackers. If an AI vendor experiences a breach, bad actors could get their hands on your supporters’ personal information and use it for nefarious purposes.

However, you can mitigate these risks with a few strategic tactics. For example, set clear data governance policies, limit the type of information entered into AI systems, and vet vendors for strict security and privacy standards. You might also consult a nonprofit tech expert to make sure you stay compliant and follow best practices.

Adopting AI is the current focus of many digital transformation efforts. To launch your strategy on the right path, establish internal policies for AI use, train staff on how and when to apply AI responsibly, and regularly audit outputs for accuracy and bias. Pair these safeguards with open communication with donors about your values, and AI can become a tool that amplifies your mission without undermining trust.
